AI adoption survey 2025 by Easy Redmine: Real insights into AI maturity

Our 2025 AI adoption maturity survey cuts through the hype to show what’s really happening with AI in organisations: plenty of experimentation, little coordination, and growing security risks from unmanaged public-cloud tools. See how your organisation compares and where the biggest gaps in AI maturity still are.

Table of contents

Survey on the adoption of AI by Easy Redmine

AI governance gap: High adoption, low control

AI adoption racing ahead of governance maturity

The warning sign: Public cloud is not that safe

Key insights from AI adoption survey

TL;DR

Easy Redmine’s Q4 2025 survey shows that while most organisations are actively experimenting with AI, adoption is fragmented, heavily reliant on public tools, and largely unmanaged, exposing a major governance and security gap that must be closed to scale AI responsibly.

Survey on the adoption of AI by Easy Redmine

At Easy Redmine, we live AI adoption to the fullest, but one question kept hanging in the air: how are our clients handling this challenge?

So in Q4 of 2025, we conducted an AI adoption survey across 36 organisations spanning the IT, public sector, manufacturing, finance and services industries. You can find the detailed methodology in the downloadable survey.

The data showed the following information about the overall AI adoption landscape of the surveyed organisations:

- 3% have no adoption (AI usage is restricted)

- 58% use AI tools individually (isolated or pilot use)

- 19% use AI features integrated into existing tools (operational integration)

- 14% have a mix of different AI adoption levels (transitional phase)

- 6% use AI to run automated workflows (AI-driven process automation)

- 0% are in the transformative stage (using AI agents)

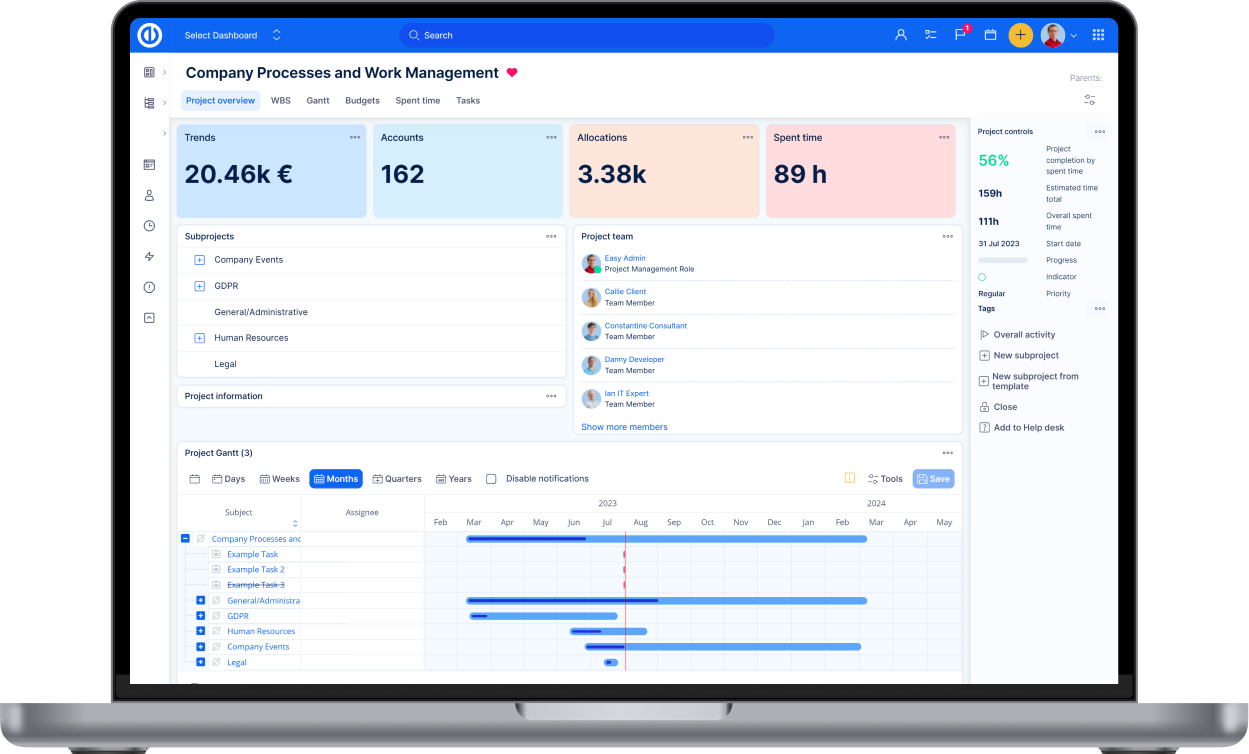

AI adoption by Easy Redmine survey in Q4 2025
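With a sample of 36 organisations, each respondent accounts for roughly 2.8 percentage points, so it helps to read the shares above as approximate headcounts. The sketch below back-calculates those counts, assuming the published percentages are rounded shares of all 36 respondents. The short category labels and the `estimate_counts` helper are illustrative names, not part of the survey, and the resulting counts are estimates rather than figures from the report.

```python
SAMPLE_SIZE = 36  # organisations surveyed, as stated in the article

# Reported shares in percent; labels are shortened for illustration
reported_shares = {
    "no adoption": 3,
    "individual use": 58,
    "integrated features": 19,
    "mixed levels": 14,
    "automated workflows": 6,
    "AI agents": 0,
}

def estimate_counts(shares, sample_size):
    """Convert rounded percentages to the nearest whole number of respondents."""
    return {label: round(pct * sample_size / 100) for label, pct in shares.items()}

estimated = estimate_counts(reported_shares, SAMPLE_SIZE)
for label, count in estimated.items():
    print(f"{label}: ~{count} of {SAMPLE_SIZE}")
```

Read this way, a 3% share corresponds to about one organisation, which is worth keeping in mind when comparing the smaller categories.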

More than half of the surveyed organisations (58%) are actively experimenting with AI and have built strong internal interest, but they’re still early in their maturity.

They’ve proven AI’s potential through employee-led experimentation, yet haven’t established governance or security frameworks, systematically measured business impact, scaled beyond pilots, or unified key data sources, leaving significant room for growth and differentiation.

The fact that 14% of organisations have uneven, mixed AI adoption suggests:

- AI is being adopted from the bottom up without a clear central strategy

- AI maturity is fragmented across departments

- There is a need to unify and standardise approaches

- There is room to scale successful departmental AI initiatives across the organisation

AI is mostly used by individuals rather than being part of a coordinated company strategy. While many teams are curious and experimenting, there’s still no clear structure or official support across the whole organisation.

AI governance gap: High adoption, low control

The data reveal a clear trend: most organisations are implementing AI without the required governance foundations. Specifically:

- 81% operate without central oversight

- 61% rely on unregulated public AI tools

- 58% use AI mainly through individual experimentation, resulting in inconsistent practices and higher risk

In the absence of common standards or secure infrastructure, AI adoption remains informal and fragmented, constraining both security and business outcomes.

AI adoption racing ahead of governance maturity

Overall, the findings from the survey show an AI governance vacuum: AI is already embedded in day-to-day work, but control frameworks, ownership, and accountability have not caught up.

The question “Who controls the use of AI in your team?” revealed that:

- 81% have no central control or governance over AI usage

- 14% have some guidelines but loose enforcement

- 3% have centralised management and standardised tools

- 3% block AI usage

Only 3% report having centralised management and standardised tools, and that is alarming.

Organisations urgently need to transition from informal, individual-led experimentation to centralised yet enabling governance, including clear policies, approved tools, training, and monitoring, if they are to realise AI’s benefits while managing its risks in a defensible and auditable manner.

The warning sign: Public cloud is not that safe

When we asked organisations where their AI tools are actually hosted, the responses clustered into four main categories:

- 61% rely entirely on public AI services (ChatGPT, Claude, etc.)

- 28% have AI tools embedded in core software systems

- 3% use internal systems with approved AI APIs

- 8% claim no usage of AI tools

Relying on public cloud for AI means giving up control over models, data, and pipelines to providers. This increases security risks like data leaks, attacks, and limited visibility.

And as the survey revealed, many organisations use public AI tools without proper rules or oversight. This leads to serious risks around security and compliance, showing the urgent need for secure, well-managed AI systems.

Key insights from AI adoption survey

Organisations are no longer debating whether to adopt AI; the challenge has shifted to scaling it in a responsible and effective way. Those that prioritise strong governance, data readiness, and coordinated implementation will be best placed to advance toward automation and AI agents.

Also, there is clear evidence that robust AI governance is still the exception, not the norm.

What exactly is holding companies back from AI adoption? What is their actual vision for AI? How is AI integrated into daily work? What are our recommendations?

Get those answers in the full survey report, which reveals even more insights about AI adoption among companies. Download it for free to get a realistic picture of AI maturity at the end of 2025. The report is designed to offer the clarity and guidance required to navigate that next stage.

Are you unsure which phase of AI maturity your company is in? Take the AI adoption test!