Yes to AI—but only if it’s secure

AI is changing how we work—but can your organisation rely on it to handle sensitive data safely? Before jumping in, discover the hidden risks most teams overlook and how to stay secure, compliant, and in control.

Table of contents

AI is here to stay: But can you trust it?

AI tools vs. data security: 55% hesitate

Why AI adoption needs data control

Five hidden AI security risks organisations often overlook

How to address the 5 hidden AI security risks

1. Prevent data exposure from public AI tools

2. Ensure transparency and explainability

3. Reduce regulatory and compliance risks

4. Limit exposure in shared cloud environments

5. Maintain human oversight and accountability

AI you can trust for your project management

TL;DR

Many organisations hesitate to adopt AI because of serious concerns about data security, regulatory compliance, and lack of control. That makes secure, transparent, on-premises solutions like Easy AI by Easy Redmine a trusted alternative.

AI is here to stay: But can you trust it?

From chatbots and assistants to full-scale project and work automation, AI is rapidly transforming how organisations operate. It’s fast, powerful, and increasingly essential—but its adoption is not without hesitation. Many businesses, particularly in regulated sectors like finance, healthcare, defence, or public services, face strict compliance demands and heightened concerns around data security, confidentiality, and accountability.

When AI systems rely on opaque algorithms or external infrastructure, the risk of misuse, data breaches, or even industrial espionage becomes a serious barrier to adoption.

AI tools vs. data security: 55% hesitate

Deloitte reported that 55% of organisations avoid certain AI use cases due to concerns over data security and privacy. The recent boom of AI tools, often cloud-based and trained on massive datasets, has made it harder to distinguish between innovation and risk.

Why AI adoption needs data control

Even cloud giants are adjusting. Microsoft recently announced “Microsoft 365 Local,” a version of its cloud suite tailored for specific European markets, designed to address growing concerns around digital sovereignty and regulatory pressure.

With this move, Microsoft is showing that even cloud-based services need to change—by offering more control over data and local storage to meet the needs of European governments and businesses. It's a clear indication: the future of AI and cloud isn’t one-size-fits-all.

Flexibility, control, and trust are becoming just as important as functionality.

The message is clear: AI adoption should not come at the cost of data security. But identifying where the risks actually lie is the first step.

Five hidden AI security risks organisations often overlook

Here are five often-overlooked security threats that can quietly undermine your AI strategy—especially if left unaddressed.

- Data security gaps in third-party AI tools: Using consumer-facing AI tools to operate on internal or client data—even casually—can result in that data leaving your environment and being stored or processed in unknown ways.

- Lack of transparency in AI-generated outputs: Many LLMs provide answers without showing how they arrived at them. In regulated industries, this lack of transparency is not just a red flag—it’s a compliance issue. When decisions are made or supported by AI, you need an audit trail.

- Regulatory exposure (GDPR, NIS2, AI Act): The EU AI Act, GDPR, and NIS2 are tightening controls on how data is handled and who is responsible for AI-driven outcomes. Non-compliance isn’t just a theoretical risk: it can result in serious fines and operational restrictions.

- Shared cloud environments increase your security risk: When you use public cloud services, your data often shares infrastructure with other companies’ data. This shared setup can make it easier for mistakes or breaches to happen, for example when someone gains access through a misconfigured setting. In one recent ransomware attack, more than 1,200 AWS cloud credentials were leaked and used to encrypt S3 buckets. And even if your data is encrypted, it might still pass through systems you don’t fully control.

- Lack of accountability and human control: Automated decisions without clear human oversight can lead to errors that are hard to trace or correct. Without the ability to control, verify, or override AI-generated actions, your organisation could be exposed to both operational and legal risks.

How to address the 5 hidden AI security risks

To help teams stay both innovative and compliant, we’ll explore five practical rules that every organisation should follow when deploying AI tools, especially in project and work management and data-sensitive operations:

1. Prevent data exposure from public AI tools

Avoid feeding sensitive information into public AI platforms—doing so may expose your data to unclear processing or storage practices. Some platforms, like ChatGPT, offer the option to disable training on user data, but relying on such settings still means placing trust in an external system.

For organisations with higher security requirements, it may be worth exploring alternatives like on-premises deployment, private cloud environments, or even running a language model directly within your own infrastructure. While these options require more internal capacity, they offer greater control over how data is processed and protected.
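Where a team still needs to send text to an external AI service, one common safeguard is to mask obvious identifiers first. The snippet below is a minimal sketch of that idea, not a complete data loss prevention solution: the `PATTERNS` table and the `redact` function are hypothetical names chosen for illustration, and the three regular expressions are simplified assumptions that would miss many real-world formats.

```python
import re

# Hypothetical, deliberately simple patterns. A real deployment would need
# a vetted and much broader set (names, addresses, customer IDs, ...).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "IBAN": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with placeholders and count what was masked."""
    counts: dict[str, int] = {}
    # Patterns run in dictionary order, so IBANs are masked before the
    # looser phone pattern can match the digits inside them.
    for label, pattern in PATTERNS.items():
        text, found = pattern.subn(f"[{label}]", text)
        counts[label] = found
    return text, counts


if __name__ == "__main__":
    prompt = "Summarise the complaint from jana.novak@example.com, tel. +420 123 456 789."
    masked, counts = redact(prompt)
    print(masked)
    print(counts)
```

The counts can be written to a log so that reviewers can see how often sensitive data was about to leave the environment. Pattern-based masking only reduces exposure; it does not replace the hosting choices described above.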

2. Ensure transparency and explainability

To build trust in AI outputs, systems must go beyond black-box behavior. Every AI-generated statement or recommendation should be supported by clear citations or references to its source data. This makes decisions understandable, traceable, and auditable—especially crucial in regulated environments or when AI supports critical operations.
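One way to enforce this in practice is to treat citations as required data rather than optional decoration. The following is a rough sketch under stated assumptions: `Citation`, `Statement`, and `unsupported` are hypothetical names, and it assumes the AI system returns its answer as separate statements, each with a list of source identifiers that can be checked against a register of known documents.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Citation:
    source_id: str  # hypothetical identifier of a document or ticket
    excerpt: str    # the passage the statement relies on


@dataclass
class Statement:
    text: str
    citations: list[Citation] = field(default_factory=list)


def unsupported(statements: list[Statement], known_sources: set[str]) -> list[str]:
    """Return the text of every statement that cannot be traced to a known source."""
    flagged = []
    for statement in statements:
        cited = {c.source_id for c in statement.citations}
        # Flag statements with no citation at all, or citing an unknown source.
        if not cited or not cited <= known_sources:
            flagged.append(statement.text)
    return flagged


if __name__ == "__main__":
    answer = [
        Statement("The milestone slipped by two weeks.",
                  [Citation("TASK-101", "Due date moved from 1 May to 15 May")]),
        Statement("The client has accepted the delay."),
    ]
    print(unsupported(answer, known_sources={"TASK-101"}))
```

Flagged statements can be hidden, marked as unverified, or routed to a human reviewer, depending on how critical the decision is. The check only confirms that a source is named; whether the source actually supports the statement still needs human judgement.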

3. Reduce regulatory and compliance risks

Make sure your AI tools support compliance with GDPR, NIS2, ISO 27001, or other relevant regulations and standards. Look for vendors who offer EU-hosted or sovereign cloud options and can provide clear documentation and audit trails. Proactively building compliance into your AI strategy can prevent future headaches as regulations tighten.

4. Limit exposure in shared cloud environments

While public cloud AI tools are widely available, they come with shared infrastructure and therefore increased risk.

Whenever possible, opt for dedicated cloud environments, or better yet, on-premises solutions for high-risk or regulated data. Even within cloud setups, prioritise encryption, access controls, and vendor transparency.

5. Maintain human oversight and accountability

AI should support decision-makers, not replace them. Ensure that AI suggestions or automations are always subject to review, approval, or override by authorised users. Tools should allow for role-based permissions and make it clear who initiated or approved each AI-driven task or outcome.
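As an illustration of what such a review step can look like, here is a minimal sketch of an approval gate with an audit log. It is not taken from any particular product: the role names in `APPROVER_ROLES`, the `AIAction` class, and the `review` function are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role names; a real system would load these from its own
# permission model.
APPROVER_ROLES = {"project_manager", "admin"}


@dataclass
class AIAction:
    description: str
    initiated_by: str
    status: str = "pending"
    audit_log: list[dict] = field(default_factory=list)

    def record(self, event: str, user: str) -> None:
        """Append a timestamped entry so every decision stays traceable."""
        self.audit_log.append({
            "event": event,
            "user": user,
            "at": datetime.now(timezone.utc).isoformat(),
        })


def review(action: AIAction, user: str, role: str, approve: bool) -> AIAction:
    """Let an authorised user approve or reject a pending AI suggestion."""
    if role not in APPROVER_ROLES:
        raise PermissionError(f"role '{role}' may not review AI actions")
    if action.status != "pending":
        raise ValueError(f"action is already {action.status}")
    action.status = "approved" if approve else "rejected"
    action.record(action.status, user)
    return action


if __name__ == "__main__":
    action = AIAction("Reassign 12 overdue tasks", initiated_by="ai-assistant")
    review(action, user="j.novak", role="project_manager", approve=True)
    print(action.status, action.audit_log)
```

Nothing is executed while the status is still `pending`, and the log answers the two questions auditors tend to ask: who initiated the action and who signed it off.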

These are foundational steps toward safe and compliant AI adoption—especially for organisations working with sensitive data, regulated environments, or large project portfolios.

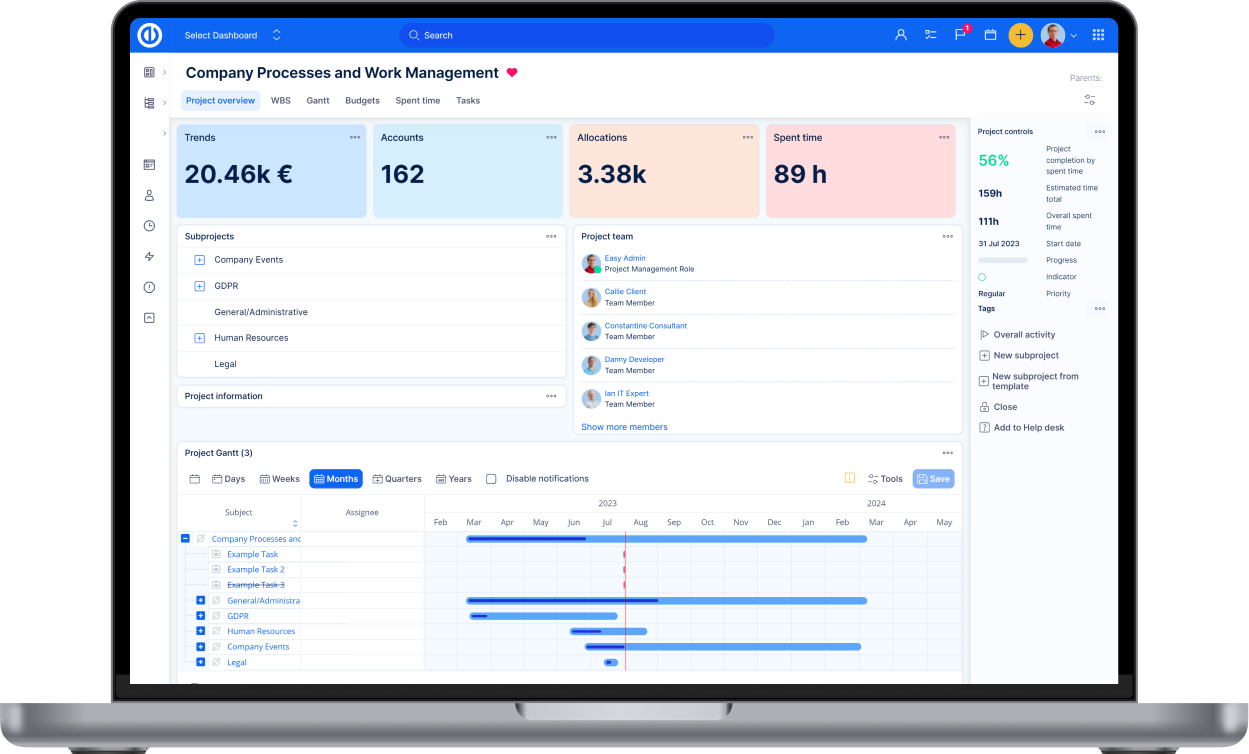

AI you can trust for your project management

If you're looking for a solution that already incorporates these principles, Easy AI by Easy Redmine is one example of how AI can be deployed responsibly—offering flexible hosting options, full auditability, and built-in controls that keep your data protected and your team in charge.

Curious? Watch the webinar recording to see how AI agents can enhance your security strategy in real time.

Ready to explore how AI fits into your project management? Reach out to our sales team for more details. We’re here to support your AI adoption journey—every step of the way!